Facebook Vice President Yann LeCun on "the challenges facing machine learning" (Gendai Business) - Yahoo! News

Mr. Yann LeCun, Vice President of Facebook and known as the father of deep learning, discusses the past, present, and future of AI and of deep learning, its core technology, in his bestseller "Deep Learning: The Learning Machine," which has sold 100,000 copies in France. Let's take a look at what this exciting book by Mr. LeCun has to tell us.

Future and challenges of AI research

Photo by Getty Images

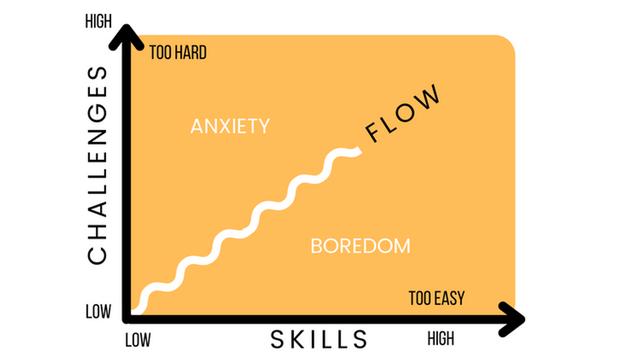

So far, even the best AI systems fall far short of the human brain. Far from matching humans, they are less intelligent than a cat. A cat's brain contains 760 million neurons and 10 trillion synapses. AI does not even approach a dog, a comparable animal whose brain has 2.2 billion neurons. The human brain has 86 billion neurons and consumes about 25 watts. At present, it is impossible to design and build a machine with comparable performance.

As we saw in Chapter 1 (Editor's note: "Deep Learning: The Learning Machine," Chapter 1, "The AI Revolution"), even if we understood the brain's learning principles and unraveled its structure, reproducing its behavior would require a tremendous amount of computing power: approximately 1.5 × 10¹⁸ operations per second. Today's GPU cards are capable of 10¹³ operations per second and consume about 250 watts each. Matching the human brain would therefore take a huge computer built from on the order of 100,000 of these processors, drawing at least 25 megawatts. In other words, it would consume about a million times as much energy as the human brain.

AI researchers at Google and Facebook do work with this level of total computing power, but harnessing thousands of processors for a single task is a daunting challenge. Many scientific problems remain to be solved, and so do technical ones. We are constantly striving to push the boundaries of our current systems. Which path is the most promising? What can we expect from future research?
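The energy comparison in this passage is simple arithmetic on the figures it quotes, so it can be rechecked in a few lines. The Python sketch below is only an illustration: the constant names and the `estimate` helper are hypothetical choices, not anything from the book. Dividing 1.5 × 10¹⁸ by 10¹³ gives 150,000 cards rather than a round 100,000, which suggests the 25 megawatts and the million-fold gap are best read as lower bounds.

```python
# Back-of-the-envelope check of the brain-vs-GPU estimate quoted in the text.
# All figures come from the passage; they are rough orders of magnitude.
BRAIN_OPS_PER_SEC = 1.5e18  # operations/s needed to emulate the brain
BRAIN_WATTS = 25.0          # power drawn by the human brain
GPU_OPS_PER_SEC = 1e13      # one current GPU card
GPU_WATTS = 250.0           # power drawn by one GPU card


def estimate(num_gpus: float) -> tuple[float, float]:
    """Return (total power in megawatts, ratio to brain power) for num_gpus cards."""
    watts = num_gpus * GPU_WATTS
    return watts / 1e6, watts / BRAIN_WATTS


# Number of GPUs strictly needed to reach the brain's operation rate.
exact_gpus = BRAIN_OPS_PER_SEC / GPU_OPS_PER_SEC

for label, n in [("rounded figure from the text", 100_000), ("exact ratio", exact_gpus)]:
    megawatts, ratio = estimate(n)
    print(f"{label}: {n:,.0f} GPUs, {megawatts:.1f} MW, {ratio:,.0f}x the brain")
```

Running it prints one line per case: the rounded figure reproduces the text's 25 MW and million-fold ratio, while the exact ratio gives 150,000 GPUs, 37.5 MW, and 1.5 million times the brain's power.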
